From Connection to Cognition

Extending Edge AI capabilities into high-end, real-time vision processing & analytics

As enterprise AI moves from the centralized cloud to on-premises edge computing, demand grows for powerful, flexible, and secure GPU servers. The ADLINK AXE Series meets this need by running the full AI processing pipeline at the edge. It delivers real-time insights and keeps data on-premises for maximum privacy, with no cloud dependency.

Most AXE Series servers are NVIDIA-Certified. They have passed rigorous testing for compatibility, stability, and optimized AI performance, which supports reliable operation and fast deployment.

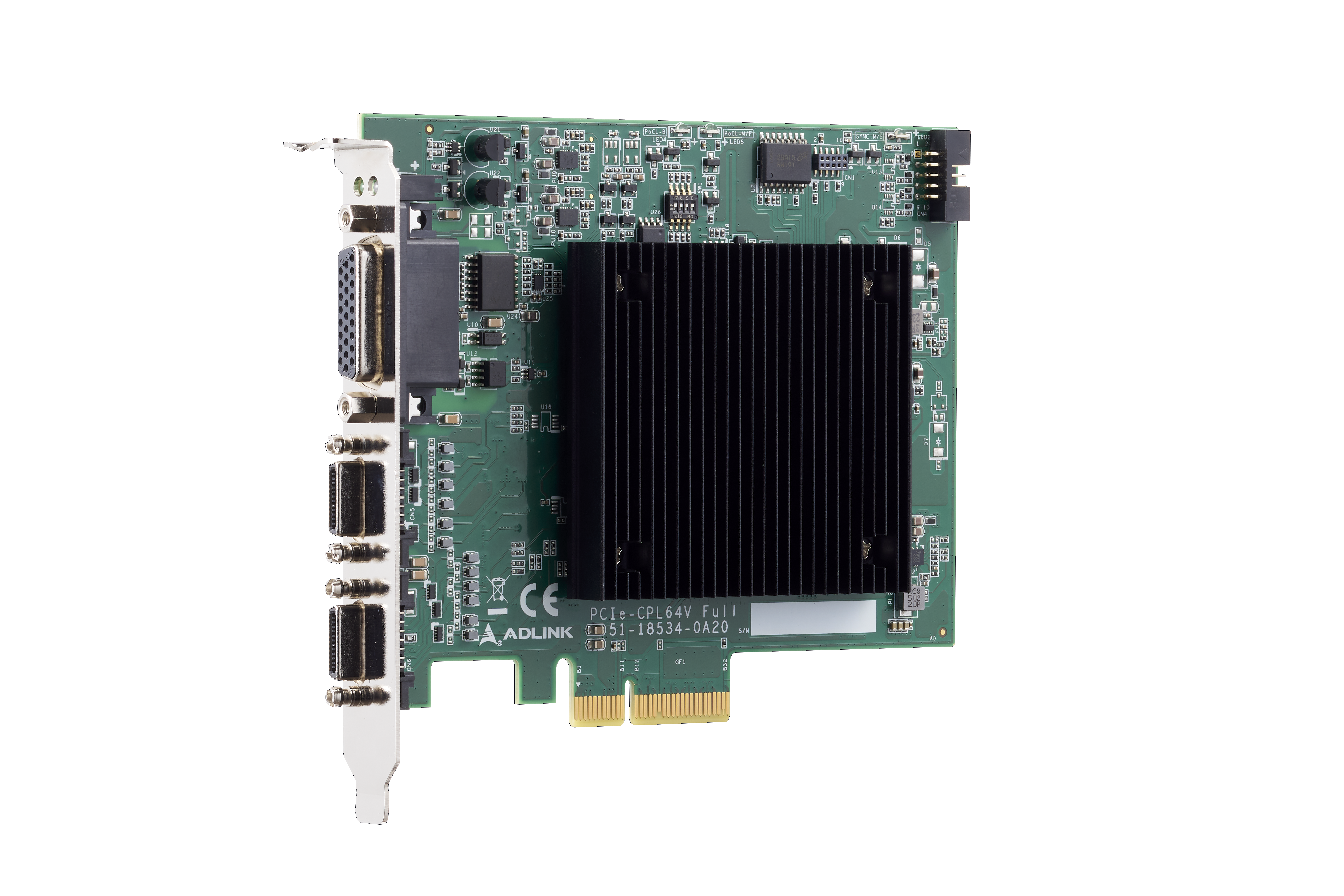

The AXE Series extends ADLINK’s edge AI capabilities into high-end, real-time vision processing and analytics, including vision-language-action (VLA) workloads. It enables enterprises to handle the most demanding visual data streams with high precision and speed.

Accelerate Intelligence with an AI-Ready Architecture

Edge-Native Design

Compact, industrial-grade reliability with an extended product lifecycle

GPU-Optimized Hub

Precision-tuned power and thermal design for peak accelerator throughput

AI-Ready Platform

Certified integration for GPUs, accelerators, and frame grabbers